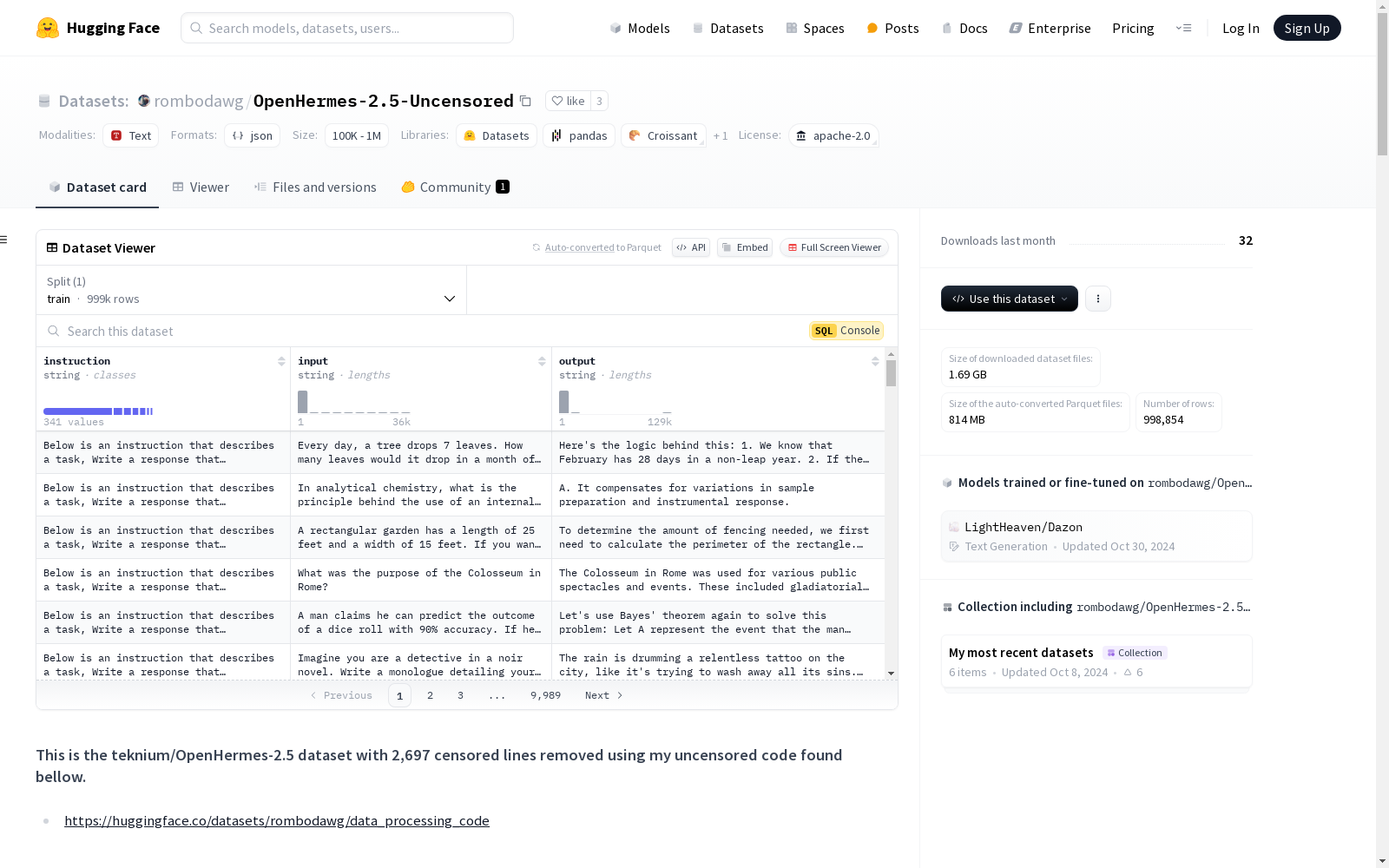

OpenHermes-2.5-Uncensored|自然语言处理数据集|数据集训练数据集

收藏数据集卡片

数据集描述

Open Hermes 2.5 数据集是 Open Hermes 1 数据集的延续,规模更大、多样性更高、质量更高,主要包含合成生成的指令和聊天样本,总量达到 100 万。

数据集来源

该数据集整合了多个开源数据集和自定义生成的合成数据集,包括:

- Airoboros 2.2

- CamelAI 领域专家数据集(物理、数学、化学和生物)

- ChatBot Arena(仅 GPT-4)

- Collective Cognition(2023-09-22)

- CoT Alpaca GPT4

- Evol Instruct 70K 和 140K

- Glaive Code Assistant

- GPT4-LLM

- GPTeacher

- Medical Tasks

- MetaMath 40k

- SlimOrca 550K

- Platypus

- ShareGPT(仅 GPT-4)

- Unnatural Instructions GPT4

数据集结构

数据集遵循 sharegpt 结构,包含一系列字典,每个字典包含一个名为 conversations 的新列表。每个对话轮次包含两个字典,一个 "from" 字段表示该轮次的角色,一个 "value" 字段包含实际文本。

示例:

json { "conversations": [ { "from": "system", "value": "" }, { "from": "human", "value": "Every day, a tree drops 7 leaves. How many leaves would it drop in a month of February in a non-leap year? Include your logic." }, { "from": "gpt", "value": "Heres the logic behind this:

- We know that February has 28 days in a non-leap year.

- If the tree drops 7 leaves every day, then over the course of February, it would drop: Leaves dropped in February = Leaves per day * Days in February = 7 leaves * 28 days = 196 leaves

So, the tree would drop 196 leaves in February in a non-leap year." } ], "source": "airoboros2.2", "category": "orca" }

引用

bibtex @misc{OpenHermes 2.5, title = {OpenHermes 2.5: An Open Dataset of Synthetic Data for Generalist LLM Assistants}, author = {Teknium}, year = {2023}, publisher = {HuggingFace}, url = {https://huggingface.co/datasets/teknium/OpenHermes-2.5} }