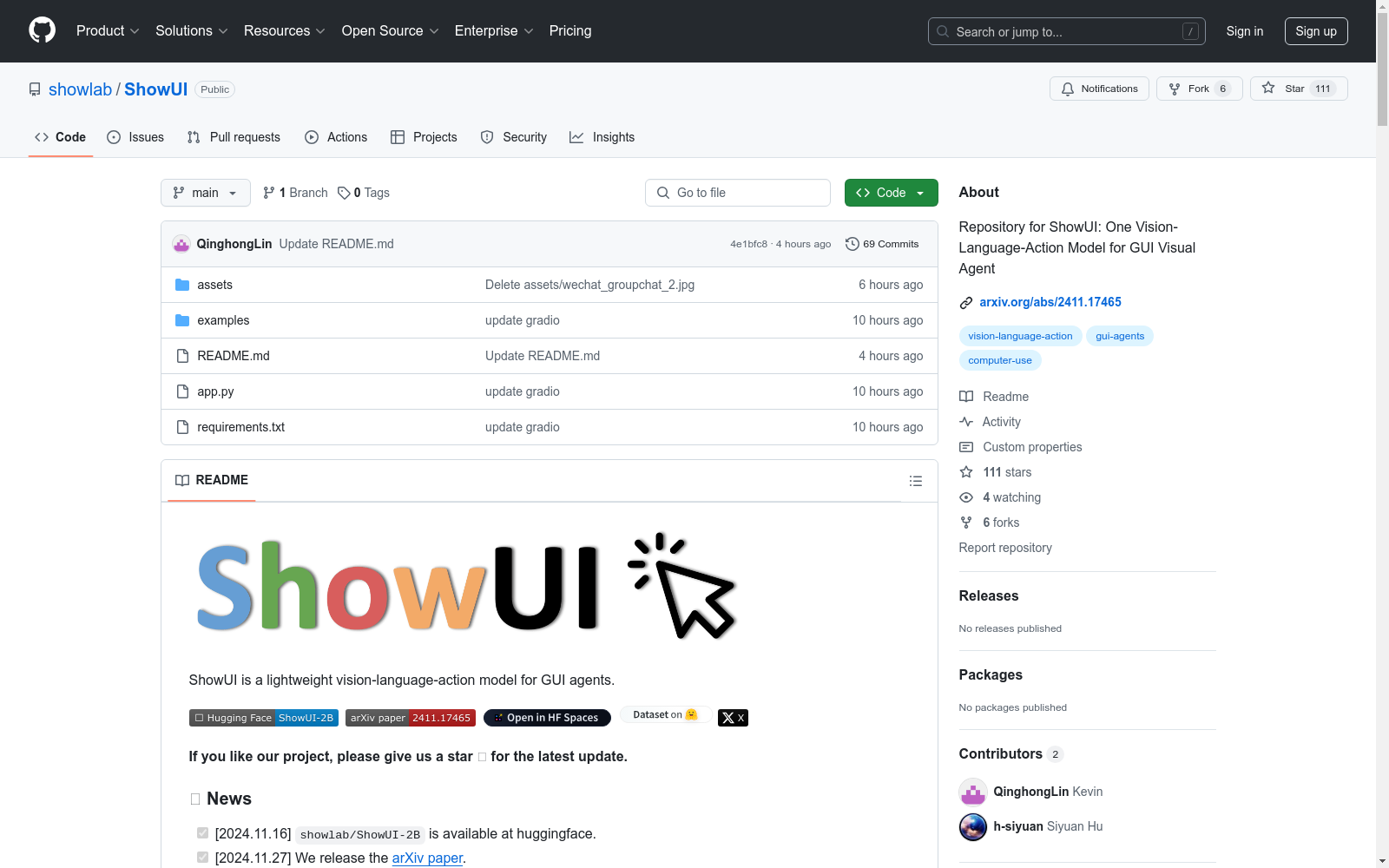

ShowUI-desktop-8K | GUI Visual Agent Dataset | UI Grounding Dataset

ShowUI Dataset Overview

Dataset Introduction

ShowUI is a lightweight vision-language-action model designed for GUI agents.

Dataset Release Information

- Release date: 2024.11.27

- Dataset name: ShowUI-desktop-8K

- Dataset link: ShowUI-desktop-8K

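Dataset Contents

- UI Grounding data: for locating a UI element on a screenshot from its text description.

- UI Navigation data: for navigating a UI, i.e. predicting the sequence of actions that completes a task instruction.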

Usage Examples

UI Grounding

```python
import ast

from qwen_vl_utils import process_vision_info

# Assumes `model`, `processor`, `min_pixels`, `max_pixels` and the `draw_point`
# visualization helper are defined beforehand (e.g. as in the ShowUI quick-start).
img_url = "examples/web_dbd7514b-9ca3-40cd-b09a-990f7b955da1.png"
query = "Nahant"

_SYSTEM = "Based on the screenshot of the page, I give a text description and you give its corresponding location. The coordinate represents a clickable location [x, y] for an element, which is a relative coordinate on the screenshot, scaled from 0 to 1."
messages = [
    {
        "role": "user",
        "content": [
            {"type": "text", "text": _SYSTEM},
            {"type": "image", "image": img_url, "min_pixels": min_pixels, "max_pixels": max_pixels},
            {"type": "text", "text": query},
        ],
    }
]

# Build the chat prompt and preprocess the screenshot.
text = processor.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True,
)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Generate, then drop the prompt tokens so only the answer is decoded.
generated_ids = model.generate(**inputs, max_new_tokens=128)
generated_ids_trimmed = [
    out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)[0]

# The answer is a list literal holding the relative [x, y] click point.
click_xy = ast.literal_eval(output_text)
# [0.73, 0.21]

# Mark the predicted point on the screenshot (radius 10).
draw_point(img_url, click_xy, 10)
```
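The grounding prompt asks for a clickable point `[x, y]` in relative coordinates scaled from 0 to 1, so executing a click requires scaling that point by the screenshot size. Below is a minimal sketch, assuming the model answers with a two-element list literal such as the `[0.73, 0.21]` above; `to_pixel_point` is a hypothetical helper (not part of ShowUI or `qwen_vl_utils`), and the clamping and flooring choices are assumptions rather than ShowUI's own post-processing.

```python
import ast
from typing import Tuple


def to_pixel_point(output_text: str, width: int, height: int) -> Tuple[int, int]:
    """Hypothetical helper: map a relative "[x, y]" answer (0 to 1) to pixel indices."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    point = ast.literal_eval(output_text)  # safely parses e.g. "[0.73, 0.21]"
    if not (isinstance(point, (list, tuple)) and len(point) == 2):
        raise ValueError(f"expected a two-element [x, y] list, got {output_text!r}")
    # Clamp to [0, 1] in case the answer lands slightly outside the screenshot.
    x_rel, y_rel = (min(max(float(v), 0.0), 1.0) for v in point)
    # Floor to the pixel containing the point; cap at the last pixel for a value of 1.0.
    x = min(int(x_rel * width), width - 1)
    y = min(int(y_rel * height), height - 1)
    return x, y


if __name__ == "__main__":
    # Assumed 1920x1080 screenshot; the real size comes from the input image.
    print(to_pixel_point("[0.73, 0.21]", 1920, 1080))  # (1401, 226)
```

Flooring maps each relative coordinate to the pixel that contains it, and clamping guards against answers that fall just outside the 0 to 1 range.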

UI Navigation

```python
from qwen_vl_utils import process_vision_info

# Assumes `model`, `processor`, `min_pixels` and `max_pixels` are set up as for the
# grounding example. `_NAV_SYSTEM` (a prompt template with `{_APP}` and
# `{_ACTION_SPACE}` placeholders) and `action_map` (action-space descriptions keyed
# by platform, e.g. "web") must also be defined beforehand, e.g. as in the ShowUI repository.
img_url = "examples/chrome.png"
split = "web"
system_prompt = _NAV_SYSTEM.format(_APP=split, _ACTION_SPACE=action_map[split])
query = "Search the weather for the New York city."

messages = [
    {
        "role": "user",
        "content": [
            {"type": "text", "text": system_prompt},
            {"type": "image", "image": img_url, "min_pixels": min_pixels, "max_pixels": max_pixels},
            {"type": "text", "text": query},
        ],
    }
]

# Build the chat prompt and preprocess the screenshot.
text = processor.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True,
)
image_inputs, video_inputs = process_vision_info(messages)
inputs = processor(
    text=[text],
    images=image_inputs,
    videos=video_inputs,
    padding=True,
    return_tensors="pt",
)
inputs = inputs.to("cuda")

# Generate, then drop the prompt tokens so only the answer is decoded.
generated_ids = model.generate(**inputs, max_new_tokens=128)
generated_ids_trimmed = [
    out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)[0]

print(output_text)
# {action: CLICK, value: None, position: [0.49, 0.42]},
# {action: INPUT, value: weather for New York city, position: [0.49, 0.42]},
# {action: ENTER, value: None, position: None}
```
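The navigation answer is a sequence of action entries with `action`, `value`, and `position` fields. The listing above shows them with unquoted keys and strings, so the sketch below first tries to read the text as Python dict literals and otherwise falls back to a regular expression for the bare `key: value` form. `parse_actions` is a hypothetical helper, not part of ShowUI; it assumes entries never contain nested braces and that a `value` never contains the text `, position:`.

```python
import ast
import re
from typing import Any, Dict, List

# Bare form of one entry (text between braces): "action: X, value: Y, position: Z".
_BARE_ENTRY = re.compile(
    r"action:\s*(?P<action>.*?),\s*value:\s*(?P<value>.*?),\s*position:\s*(?P<position>.*)",
    re.DOTALL,
)


def _parse_bare(body: str) -> Dict[str, Any]:
    """Parse one unquoted entry; the literal text None becomes Python None."""
    match = _BARE_ENTRY.fullmatch(body.strip())
    if match is None:
        raise ValueError(f"unrecognized action entry: {body!r}")
    value = match["value"].strip()
    position = match["position"].strip()
    return {
        "action": match["action"].strip(),
        "value": None if value == "None" else value,
        # Position is None or a list literal of relative coordinates, e.g. [0.49, 0.42].
        "position": None if position == "None" else ast.literal_eval(position),
    }


def parse_actions(output_text: str) -> List[Dict[str, Any]]:
    """Hypothetical helper: turn comma-separated action entries into a list of dicts."""
    try:
        # Succeeds when every entry is a valid Python dict literal (quoted keys/strings).
        parsed = ast.literal_eval("[" + output_text + "]")
        if all(isinstance(item, dict) for item in parsed):
            return parsed
    except (ValueError, SyntaxError, TypeError):
        pass
    # Fallback for the bare form; braces do not nest, so a lazy match isolates each entry.
    return [_parse_bare(body) for body in re.findall(r"\{(.*?)\}", output_text, re.DOTALL)]


if __name__ == "__main__":
    sample = (
        "{action: CLICK, value: None, position: [0.49, 0.42]},\n"
        "{action: INPUT, value: weather for New York city, position: [0.49, 0.42]},\n"
        "{action: ENTER, value: None, position: None}"
    )
    for step in parse_actions(sample):
        print(step["action"], step["value"], step["position"])
    # CLICK None [0.49, 0.42]
    # INPUT weather for New York city [0.49, 0.42]
    # ENTER None None
```

Parsed positions stay in relative 0-to-1 coordinates and still need scaling by the screenshot size before an action is executed.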

Citation

```bibtex
@misc{lin2024showui,
      title={ShowUI: One Vision-Language-Action Model for GUI Visual Agent},
      author={Kevin Qinghong Lin and Linjie Li and Difei Gao and Zhengyuan Yang and Shiwei Wu and Zechen Bai and Weixian Lei and Lijuan Wang and Mike Zheng Shou},
      year={2024},
      eprint={2411.17465},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2411.17465},
}
```