deepmind/pg19|语言建模数据集|长范围序列建模数据集

收藏数据集概述

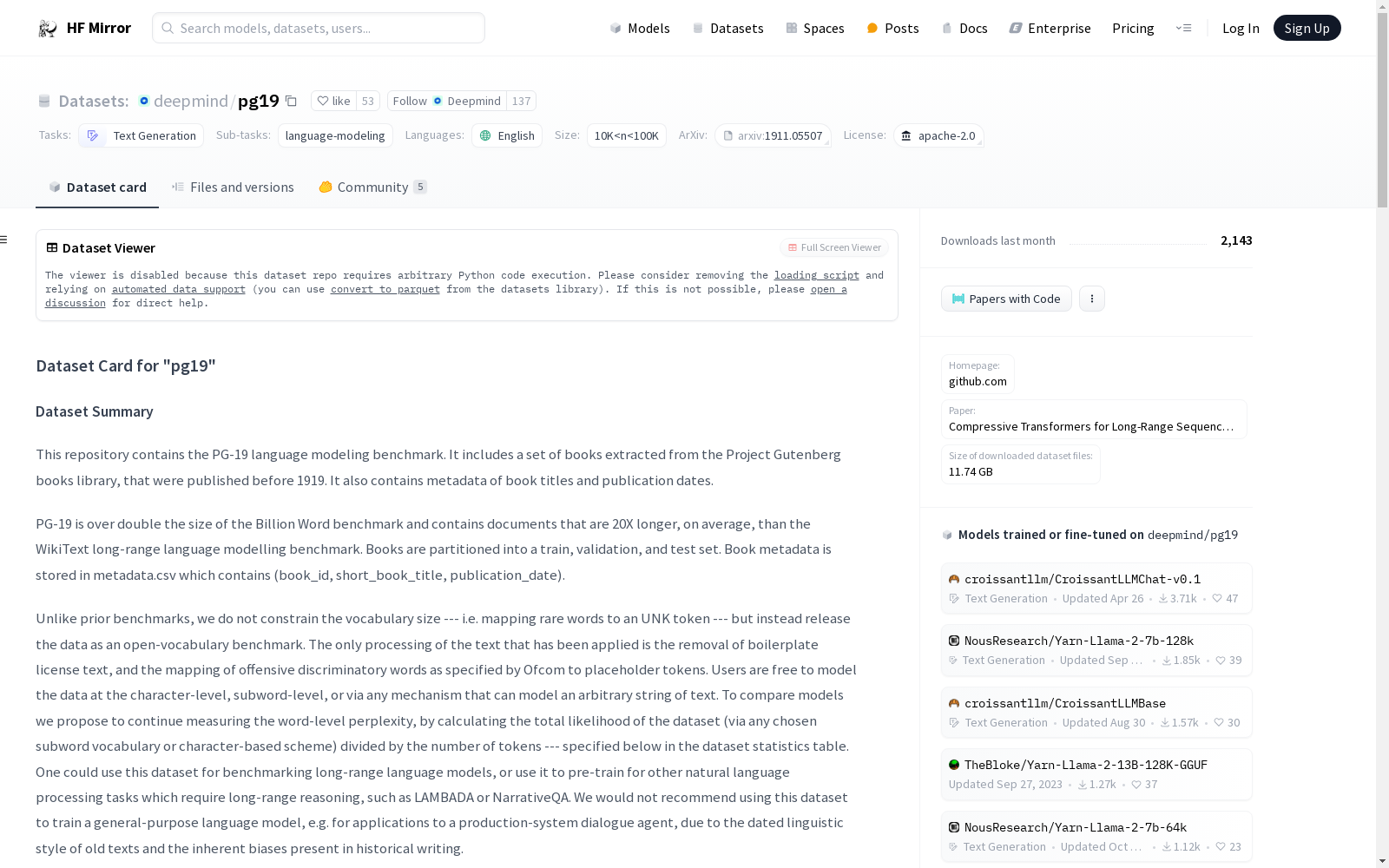

数据集名称: PG-19

数据集描述: PG-19是一个语言建模基准数据集,包含从Project Gutenberg图书库中提取的出版于1919年之前的书籍。数据集包含书籍标题和出版日期等元数据。PG-19的规模是Billion Word基准的两倍以上,平均文档长度是WikiText长距离语言建模基准的20倍。

数据集特点:

- 语言: 英语(en)

- 许可证: Apache-2.0

- 多语言性: 单语(monolingual)

- 大小分类: 10K<n<100K

- 源数据集: 原始(original)

- 任务类别: 文本生成(text-generation)

- 任务ID: 语言建模(language-modeling)

- 数据集信息:

- 特征:

short_book_title: 字符串类型publication_date: 整数类型(int32)url: 字符串类型text: 字符串类型

- 数据分割:

- 训练集(train):28602个样本,11453688452字节

- 验证集(validation):50个样本,17402295字节

- 测试集(test):100个样本,40482852字节

- 下载大小: 11.74 GB

- 数据集大小: 11.51 GB

- 特征:

数据集用途: 该数据集适用于基准测试长距离语言模型,或用于预训练其他需要长距离推理的自然语言处理任务,如LAMBADA或NarrativeQA。不推荐用于训练通用目的的语言模型,如生产系统对话代理,由于旧文本的语言风格和历史写作中固有的偏见。

许可证信息: 数据集根据Apache License, Version 2.0授权。

引用信息:

@article{raecompressive2019, author = {Rae, Jack W and Potapenko, Anna and Jayakumar, Siddhant M and Hillier, Chloe and Lillicrap, Timothy P}, title = {Compressive Transformers for Long-Range Sequence Modelling}, journal = {arXiv preprint}, url = {https://arxiv.org/abs/1911.05507}, year = {2019}, }

MultiTalk

MultiTalk数据集是由韩国科学技术院创建,包含超过420小时的2D视频,涵盖20种不同语言,旨在解决多语言环境下3D说话头生成的问题。该数据集通过自动化管道从YouTube收集,每段视频都配有语言标签和伪转录,部分视频还包含伪3D网格顶点。数据集的创建过程包括视频收集、主动说话者验证和正面人脸验证,确保数据质量。MultiTalk数据集的应用领域主要集中在提升多语言3D说话头生成的准确性和表现力,通过引入语言特定风格嵌入,使模型能够捕捉每种语言独特的嘴部运动。

arXiv 收录

OMIM (Online Mendelian Inheritance in Man)

OMIM是一个包含人类基因和遗传疾病信息的在线数据库。它提供了详细的遗传疾病描述、基因定位、相关文献和临床信息。数据集内容包括疾病名称、基因名称、基因定位、遗传模式、临床特征、相关文献引用等。

www.omim.org 收录

全国 1∶200 000 数字地质图(公开版)空间数据库

As the only one of its kind, China National Digital Geological Map (Public Version at 1∶200 000 scale) Spatial Database (CNDGM-PVSD) is based on China' s former nationwide measured results of regional geological survey at 1∶200 000 scale, and is also one of the nationwide basic geosciences spatial databases jointly accomplished by multiple organizations of China. Spatially, it embraces 1 163 geological map-sheets (at scale 1: 200 000) in both formats of MapGIS and ArcGIS, covering 72% of China's whole territory with a total data volume of 90 GB. Its main sources is from 1∶200 000 regional geological survey reports, geological maps, and mineral resources maps with an original time span from mid-1950s to early 1990s. Approved by the State's related agencies, it meets all the related technical qualification requirements and standards issued by China Geological Survey in data integrity, logic consistency, location acc racy, attribution fineness, and collation precision, and is hence of excellent and reliable quality. The CNDGM-PVSD is an important component of China' s national spatial database categories, serving as a spatial digital platform for the information construction of the State's national economy, and providing informationbackbones to the national and provincial economic planning, geohazard monitoring, geological survey, mineral resources exploration as well as macro decision-making.

DataCite Commons 收录

UniProt

UniProt(Universal Protein Resource)是全球公认的蛋白质序列与功能信息权威数据库,由欧洲生物信息学研究所(EBI)、瑞士生物信息学研究所(SIB)和美国蛋白质信息资源中心(PIR)联合运营。该数据库以其广度和深度兼备的蛋白质信息资源闻名,整合了实验验证的高质量数据与大规模预测的自动注释内容,涵盖从分子序列、结构到功能的全面信息。UniProt核心包括注释详尽的UniProtKB知识库(分为人工校验的Swiss-Prot和自动生成的TrEMBL),以及支持高效序列聚类分析的UniRef和全局蛋白质序列归档的UniParc。其卓越的数据质量和多样化的检索工具,为基础研究和药物研发提供了无可替代的支持,成为生物学研究中不可或缺的资源。

www.uniprot.org 收录

CBIS-DDSM

该数据集用于训练乳腺癌分类器或分割模型,包含3103张乳腺X光片,其中465张有多个异常。数据集分为训练集和测试集,还包括3568张裁剪的乳腺X光片和对应的掩码。

github 收录