---

title: 'Phi-1 Model Dataset'

date: '2023-07-03'

license: cc-by-nc-sa-3.0

---

## Dataset Description

- **Homepage:** [teleprint.me](https://teleprint.me)

- **Repository:** [phi-1](https://huggingface.co/datasets/teleprint-me/phi-1)

- **Paper:** [2306.11644v1](https://arxiv.org/abs/2306.11644v1)

- **Point of Contact:** [aberrio@teleprint.me](mailto:aberrio@teleprint.me)

### Dataset Summary

This dataset is being created to train the phi-1 model described in the paper

"Textbooks Are All You Need". It will contain high-quality data derived from various

textbooks, transformed and synthesized using OpenAI's GPT-3.5 and GPT-4 models.

For optimal results, it is recommended to train models with the following

parameters and sequence lengths:

- For a model with 350M parameters, use a sequence length of 2048.

- For a model with 700M parameters, use a sequence length of 4096.

- For a model with 1.3B parameters, use a sequence length of 8192.

Please note that the dataset is currently in its initial phase of planning and

collection. The process involves preparing the data, extracting it, formatting

it, chunking it, and preparing it for synthesis. Scripts for preparing and

processing the data for the model will be developed. Once the data is generated,

it will undergo a review and revision process to ensure its quality and

relevance.

These recommendations and notes are based on the dataset creator's initial plans

and may be subject to change as the project progresses.
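
The chunking step mentioned above could look something like the following minimal sketch. It is not the project's actual script, which has not been written yet: the function name `chunk_tokens`, the `overlap` parameter, and the use of whitespace splitting in place of a real tokenizer are all assumptions made for illustration.

```python
from typing import Iterator, List


def chunk_tokens(
    tokens: List[str], max_len: int, overlap: int = 0
) -> Iterator[List[str]]:
    """Yield consecutive windows of at most `max_len` tokens.

    `overlap` (hypothetical parameter) is the number of tokens shared
    between neighbouring windows.
    """
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must satisfy 0 <= overlap < max_len")

    step = max_len - overlap
    for start in range(0, len(tokens), step):
        yield tokens[start:start + max_len]
        # Stop once the current window reaches the end of the input.
        if start + max_len >= len(tokens):
            break


# Whitespace splitting stands in for a real tokenizer (an assumption).
chunks = list(chunk_tokens("a b c d e".split(), max_len=2))
# chunks == [['a', 'b'], ['c', 'd'], ['e']]
```

In practice `max_len` would be set to the sequence length chosen for the target model size, for example 2048 for the 350M configuration, and the token counts would come from the model's own tokenizer rather than from whitespace splitting.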

**NOTE**: Due to the nature of this dataset, it cannot be released without

obtaining permissions from the respective publishers and/or authors. If you are

an author or publisher and have any concerns about this repository, please feel

free to email me.

If you are an author or publisher and would like to grant permission for the use

of your work, your support would be greatly appreciated. Please note that in

order for the dataset to be released, permissions would need to be unanimous

from all involved parties.

In the absence of such permissions, I will respect the copyrights of the

copyrighted materials and exercise my right to Fair Use with my own physical

property for personal use.

**This dataset is NOT intended for commercial purposes**. Its primary purpose is

for research in machine learning and AI software development. If a model is

created using this dataset, it will be shared under the same license.

Any proceeds derived from donations will be primarily used for the development

of the dataset and the model.

### Supported Tasks and Leaderboards

- `text-generation`: The dataset can be used to train a model for chat-like text

generation, more specifically, for generating explanations and examples in the

context of arithmetic, algebra, geometry, trigonometry, calculus, algorithms

and data structures, design patterns, and the Python programming language.

### Languages

The text in the dataset is in English.

## Dataset Structure

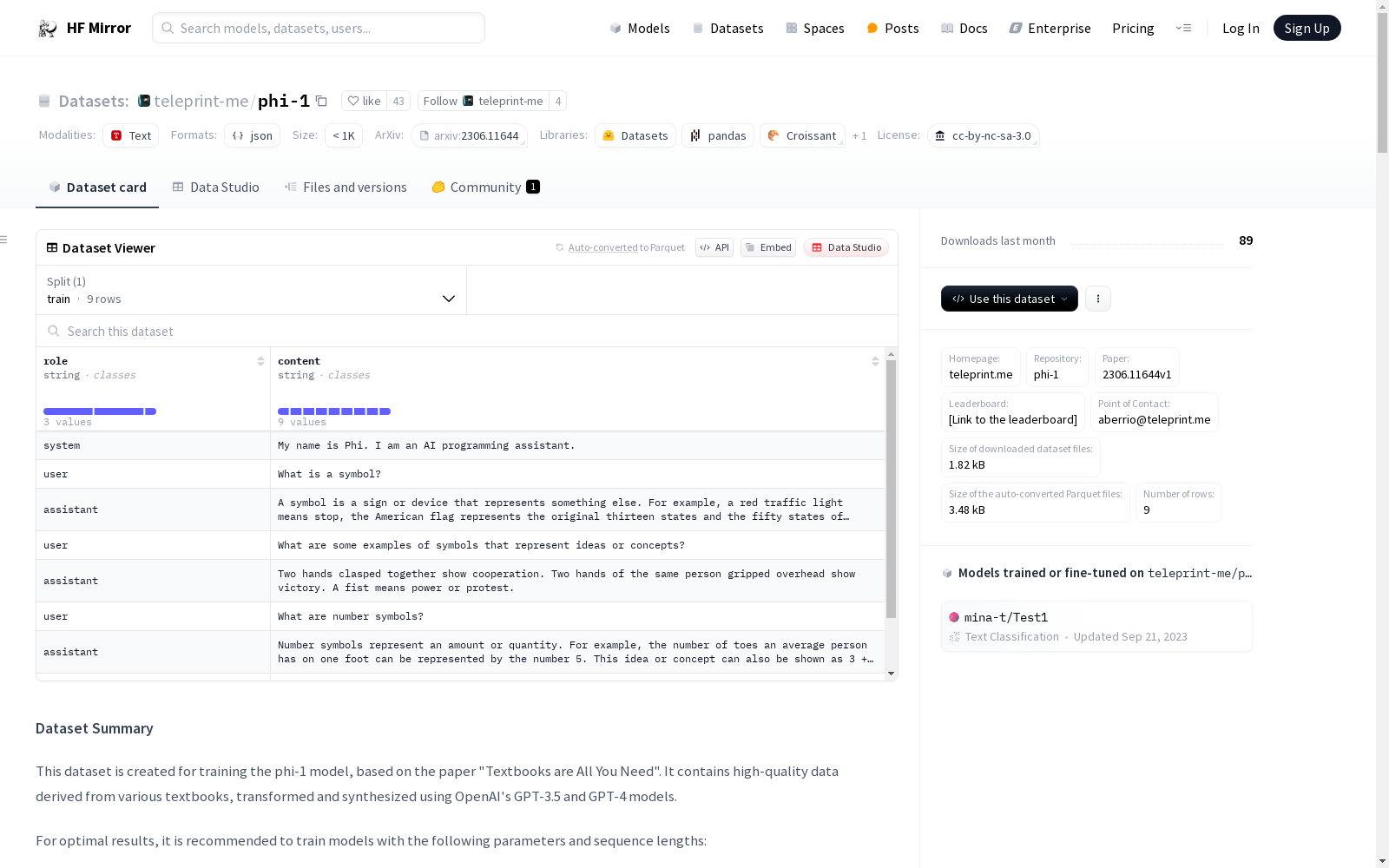

### Data Instances

A data instance consists of a dialogue between a user and an assistant,

discussing a topic in arithmetic, algebra, geometry, trigonometry, calculus,

algorithms and data structures, design patterns, or the Python programming

language. The dialogue is structured as a list of turns, each turn containing

the role (typically "user" or "assistant") and the content of the turn.

### Data Fields

- `role`: a string indicating the role of the speaker in the dialogue ("system",

"user", "assistant", "function").

- `content`: a string containing the content of the speaker's turn in the

dialogue.
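
As an illustration of the structure described above, a single instance might be represented as a list of turns like the one below. The dialogue text is invented for this sketch and is not taken from the dataset, and the `validate_dialogue` helper is hypothetical; only the `role` and `content` field names and the four role values come from this card.

```python
from typing import Dict, List

# Role values listed under "Data Fields".
ALLOWED_ROLES = {"system", "user", "assistant", "function"}

# Invented example instance (not real dataset content).
example_dialogue: List[Dict[str, str]] = [
    {
        "role": "user",
        "content": "What is the slope of the line y = 3x + 2?",
    },
    {
        "role": "assistant",
        "content": (
            "The equation is in slope-intercept form y = mx + b, "
            "so the slope is m = 3."
        ),
    },
]


def validate_dialogue(turns: List[Dict[str, str]]) -> None:
    """Raise ValueError if `turns` does not match the documented fields."""
    if not turns:
        raise ValueError("a dialogue needs at least one turn")
    for index, turn in enumerate(turns):
        if set(turn) != {"role", "content"}:
            raise ValueError(f"turn {index}: expected keys 'role' and 'content'")
        if turn["role"] not in ALLOWED_ROLES:
            raise ValueError(f"turn {index}: unknown role {turn['role']!r}")
        if not isinstance(turn["content"], str):
            raise ValueError(f"turn {index}: content must be a string")


validate_dialogue(example_dialogue)  # passes silently
```

A check of this kind could be run over every synthesized dialogue before it is added to the dataset, so that malformed turns are caught during review.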

### Data Splits

The dataset will be split into a training set, a validation set, and a test set. The

exact sizes and proportions of these splits will depend on the final size of the

dataset.
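
Since the split sizes are not yet decided, the following is only a sketch of how a reproducible three-way split could be produced once the dialogues exist. The 10% validation and 10% test fractions, the seed, and the function name `split_dataset` are placeholders chosen for illustration, not values from the project.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T],
    val_fraction: float = 0.1,   # placeholder value
    test_fraction: float = 0.1,  # placeholder value
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle deterministically and return (train, validation, test)."""
    if val_fraction < 0 or test_fraction < 0:
        raise ValueError("fractions must be non-negative")
    if val_fraction + test_fraction >= 1:
        raise ValueError("validation and test fractions must sum to less than 1")

    shuffled = list(items)
    # A seeded generator keeps the split identical across runs.
    random.Random(seed).shuffle(shuffled)

    n_val = int(len(shuffled) * val_fraction)
    n_test = int(len(shuffled) * test_fraction)

    validation = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, validation, test


train, validation, test = split_dataset(list(range(100)))
```

Fixing the seed means the same dialogues land in the same split every time the script is rerun, which keeps evaluation results comparable as the dataset grows.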

## Dataset Creation

### Curation Rationale

The dataset is being created to train a model capable of generating explanations

and examples in the context of various mathematical and computer science topics.

The goal is to create an AI assistant that can provide clear, accurate, and

pedagogically sound responses to user queries on these topics.

### Source Data

#### Initial Data Collection and Normalization

The data is collected from a variety of textbooks covering arithmetic, algebra,

geometry, trigonometry, calculus, algorithms and data structures, design

patterns, and the Python programming language. The textbooks used include:

- Barron's Arithmetic The Easy Way Fourth Edition

- Blitzer Introductory Algebra for College Students Fifth Edition

- McDougal Littell Geometry

- Blitzer Intermediate Algebra for College Students 5th Edition

- Trigonometry Sixth Edition

- Pearson College Algebra Fourth Edition

- Hughes-Hallett Applied Calculus 5th Edition

- CLRS Introduction to Algorithms Third Edition

In addition to the textbooks, the dataset also includes material from the

following online resources:

- [C reference](https://en.cppreference.com/w/c)

- [Cpp reference](https://en.cppreference.com/w/cpp)

- [Python Standard Library](https://docs.python.org/3/)

These resources provide up-to-date information and examples for the C, C++, and

Python programming languages. The creators of the Cppreference site also provide

[archives](https://en.cppreference.com/w/Cppreference:Archives) of their site

for offline use. Code samples synthesized by OpenAI's GPT models, curated by the

dataset creator, are also included in the dataset.

**Note:** The creator of this dataset owns physical copies of all the textbooks

listed above. The data from these sources are transformed into a dialogue format

using OpenAI's GPT-3.5 and GPT-4 models. The resulting dialogues are then used

as the training data for the phi-1 model. This dataset does not include the full

content of the source textbooks. Instead, it consists of transformations and

syntheses of the original content. Anyone who wants access to the full original

content should purchase or otherwise legally access the textbooks themselves.

#### Who are the source language producers?

The original language data was created by a variety of authors and educators,

who wrote the textbooks and other materials used as sources for this dataset.

These include:

- Barron's Arithmetic The Easy Way Fourth Edition - Edward Williams, Katie

Prindle

- Blitzer Introductory Algebra for College Students Fifth Edition - Robert

Blitzer

- McDougal Littell Geometry - Ron Larson, Laurie Boswell, Timothy D. Kanold, Lee

Stiff

- Blitzer Intermediate Algebra for College Students 5th Edition - Robert Blitzer

- Trigonometry Sixth Edition - Charles P. McKeague, Mark D. Turner

- Pearson College Algebra Fourth Edition - Robert F. Blitzer

- Hughes-Hallett Applied Calculus 5th Edition - Deborah Hughes-Hallett, Andrew M.

Gleason, Patti Frazer Lock, Daniel E. Flath, Sheldon P. Gordon, David O.

Lomen, David Lovelock, William G. McCallum, Brad G. Osgood, Andrew Pasquale,

Jeff Tecosky-Feldman, Joseph Thrash, Karen R. Rhea, Thomas W. Tucker

- CLRS Introduction to Algorithms Third Edition - Thomas H. Cormen, Charles E.

Leiserson, Ronald L. Rivest, Clifford Stein

In addition to these authors, the developers of OpenAI's GPT-3.5 and GPT-4

models also contributed to the creation of the language data, as these models

were used to transform the source material into a dialogue format.

### Annotations

#### Annotation process

The dataset does not contain any explicit annotations. However, the data is

curated and synthesized using OpenAI's GPT-3.5 and GPT-4 models. The process

involves transforming the source material into a dialogue format suitable for

training the phi-1 model. The dataset creator, an independent learner with a

strong interest in computer science, reviewed and curated the synthesized

dialogues to ensure their quality and relevance.

#### Who are the annotators?

The dataset creator, an independent learner who has studied computer science

extensively in a self-directed manner, performed the curation and review of the

synthesized dialogues.

### Personal and Sensitive Information

The dataset does not contain any personal or sensitive information. All the data

is derived from publicly available textbooks and online resources. Any names or

other potential identifiers in the source material have been removed or

anonymized.

### Social Impact of Dataset

The dataset is intended to support the development of AI models capable of

providing detailed explanations and examples in the context of arithmetic,

algebra, geometry, trigonometry, calculus, algorithms and data structures,

design patterns, and the Python programming language. The potential social

impact is significant, as such models could greatly enhance self-directed

learning and provide valuable educational support to students worldwide.

However, it's important to note that the quality and usefulness of the AI models

trained on this dataset will depend on the quality of the data itself. If the

data is inaccurate or biased, the models could propagate these inaccuracies and

biases, potentially leading to misinformation or unfair outcomes.

### Discussion of Biases

The dataset is based on a variety of textbooks and online resources, which may

contain their own inherent biases. For example, textbooks often reflect the

perspectives and biases of their authors, which can influence the way

information is presented. These biases could potentially be reflected in the

dataset and in any models trained on it.

### Other Known Limitations

At this stage of the dataset creation process, it's difficult to identify all

potential limitations. However, one potential limitation is that the dataset may

not cover all possible topics or perspectives within the fields it addresses.

The dataset creator will continue to monitor and assess the dataset for

limitations as the work progresses.

## Additional Information

### Dataset Curators

The dataset was curated by an independent learner with a strong interest in

computer science. The curator has studied the subject matter in a self-directed

manner, using a variety of resources including textbooks and online materials.

The curation process also involved the use of OpenAI's GPT-3.5 and GPT-4 models

to synthesize dialogues based on the source material.

### Licensing Information

This dataset is released under the Creative Commons

Attribution-NonCommercial-ShareAlike 3.0 Unported (CC BY-NC-SA 3.0)

license.

### Citation Information

As this dataset is a compilation of various sources synthesized and curated for

the purpose of training the phi-1 model, please be sure to cite the original

sources when using this dataset. If referencing the dataset directly, please

refer to this repository.