---

license: cc-by-sa-3.0

task_categories:

- question-answering

- summarization

language:

- en

size_categories:

- 10K<n<100K

---

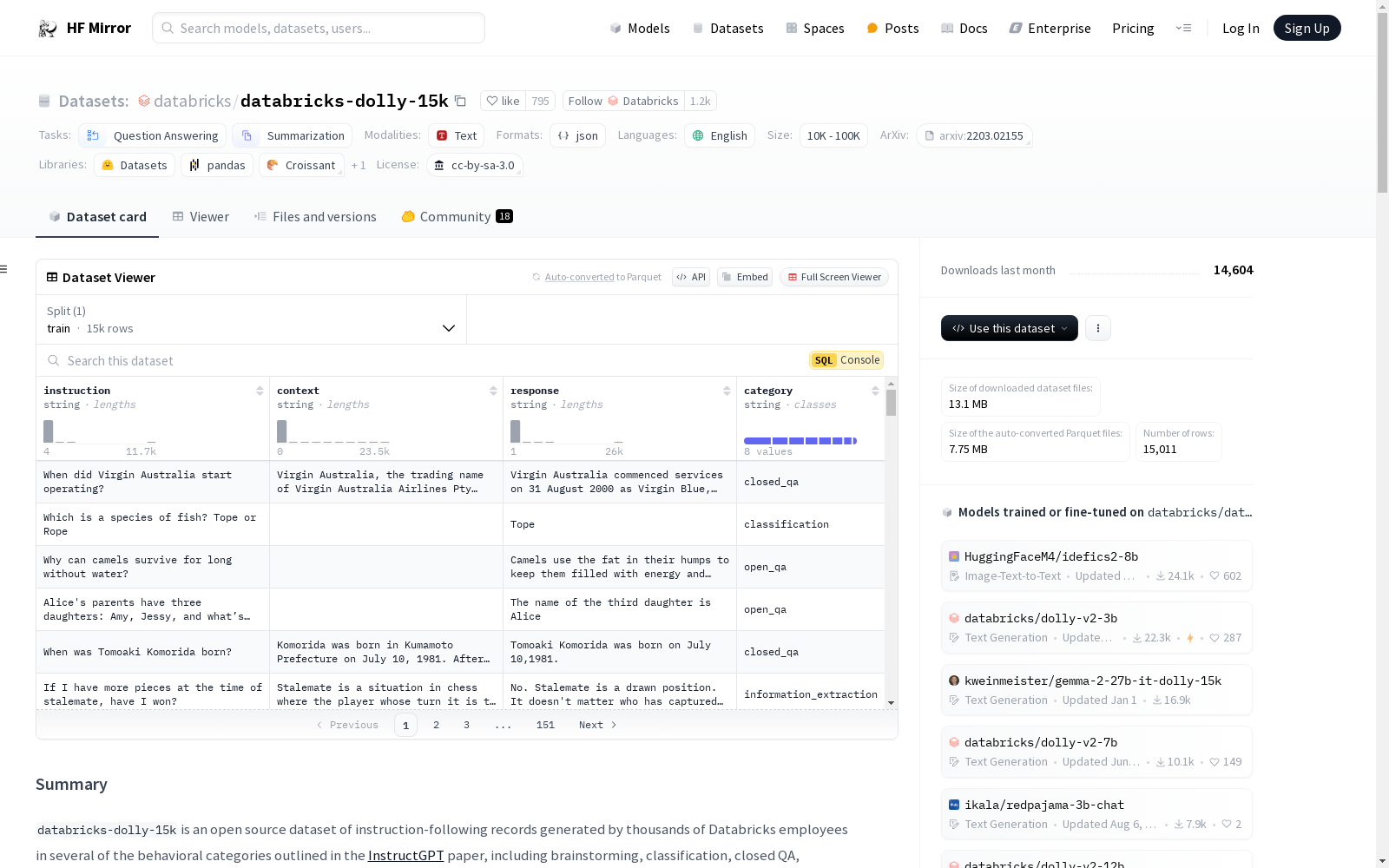

# Summary

`databricks-dolly-15k` is an open source dataset of instruction-following records generated by thousands of Databricks employees in several

of the behavioral categories outlined in the [InstructGPT](https://arxiv.org/abs/2203.02155) paper, including brainstorming, classification,

closed QA, generation, information extraction, open QA, and summarization.

This dataset can be used for any purpose, whether academic or commercial, under the terms of the

[Creative Commons Attribution-ShareAlike 3.0 Unported License](https://creativecommons.org/licenses/by-sa/3.0/legalcode).

Supported Tasks:

- Training LLMs

- Synthetic Data Generation

- Data Augmentation

Languages: English

Version: 1.0

**Owner: Databricks, Inc.**

# Dataset Overview

`databricks-dolly-15k` is a corpus of more than 15,000 records generated by thousands of Databricks employees to enable large language

models to exhibit the magical interactivity of ChatGPT.

Databricks employees were invited to create prompt / response pairs in each of eight different instruction categories, including

the seven outlined in the InstructGPT paper, as well as an open-ended free-form category. The contributors were instructed to avoid using

information from any source on the web with the exception of Wikipedia (for particular subsets of instruction categories), and explicitly

instructed to avoid using generative AI in formulating instructions or responses. Examples of each behavior were provided to motivate the

types of questions and instructions appropriate to each category.

Halfway through the data generation process, contributors were given the option of answering questions posed by other contributors.

They were asked to rephrase the original question and to select only questions they could reasonably be expected to answer correctly.

For certain categories, contributors were asked to provide reference texts copied from Wikipedia. Reference text (indicated by the `context`

field in the actual dataset) may contain bracketed Wikipedia citation numbers (e.g. `[42]`), which we recommend users remove for downstream applications.
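The cleanup recommended above could be sketched as follows. This is a minimal example, assuming the markers are purely numeric and bracketed like `[42]`; the function name and the sample sentence are made up for illustration and are not part of the dataset or any tooling shipped with it.

```python
import re

# Assumption: citation markers are bracketed integers such as "[42]" or "[3]".
_CITATION_RE = re.compile(r"\[\d+\]")


def strip_wikipedia_citations(text: str) -> str:
    """Remove bracketed numeric citation markers from a reference text."""
    without_markers = _CITATION_RE.sub("", text)
    # Collapse runs of spaces that a removed marker may leave behind.
    return re.sub(r" {2,}", " ", without_markers).strip()


# Made-up sample text, used only to illustrate the cleanup.
sample = "The river flows north.[42] It is navigable.[3]"
cleaned = strip_wikipedia_citations(sample)
print(cleaned)
```

Bracketed non-numeric text (for example `[citation needed]`) is left untouched by this pattern; whether to remove it as well is a judgment call for the downstream application.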

# Intended Uses

While immediately valuable for instruction fine-tuning large language models, as a corpus of human-generated instruction prompts,

this dataset also presents a valuable opportunity for synthetic data generation using the methods outlined in the Self-Instruct paper.

For example, contributor-generated prompts could be submitted as few-shot examples to a large open language model to generate a

corpus of millions of examples of instructions in each of the respective InstructGPT categories.
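One way to assemble such a few-shot prompt is sketched below. The prompt wording, the function name `build_few_shot_prompt`, and the seed instructions are all hypothetical; the sketch only builds the prompt string and does not call any model.

```python
from typing import Iterable


def build_few_shot_prompt(seed_instructions: Iterable[str], category: str) -> str:
    """Format seed instructions as numbered examples and leave the next slot open.

    The prompt layout is an illustrative assumption, not a format prescribed
    by the dataset or by the Self-Instruct paper.
    """
    lines = [f"Below are example instructions in the category '{category}'.", ""]
    count = 0
    for count, instruction in enumerate(seed_instructions, start=1):
        lines.append(f"Instruction {count}: {instruction}")
    # The model is expected to continue from this open slot.
    lines.append(f"Instruction {count + 1}:")
    return "\n".join(lines)


# Made-up seed instructions standing in for contributor-generated prompts.
seeds = [
    "List five ways to reuse a glass jar.",
    "Suggest names for a neighborhood book club.",
]
prompt = build_few_shot_prompt(seeds, "brainstorming")
print(prompt)
```

The returned string would then be sent to whichever language model is being used for generation, and the completion parsed as a new candidate instruction.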

Likewise, both the instructions and responses present fertile ground for data augmentation. A paraphrasing model might be used to

restate each prompt or short response, with the resulting text associated with the respective ground-truth sample. Such an approach might

provide a form of regularization on the dataset that could allow for more robust instruction-following behavior in models derived from

these synthetic datasets.
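The augmentation idea above might look like the following sketch. It assumes each record is a dictionary with `instruction` and `response` keys; `toy_paraphrase` is a trivial placeholder for a real paraphrasing model, and the `source_index` and `is_paraphrase` keys are hypothetical bookkeeping fields added here to tie each paraphrase back to its ground-truth sample.

```python
from typing import Callable, Dict, List


def toy_paraphrase(text: str) -> str:
    """Placeholder for a real paraphrasing model (assumption for illustration)."""
    return "In other words: " + text


def augment_with_paraphrases(
    records: List[Dict[str, object]],
    paraphrase: Callable[[str], str],
) -> List[Dict[str, object]]:
    """Return the original records plus one paraphrased copy of each.

    Every output record carries the index of the record it came from, so a
    paraphrased prompt stays linked to its ground-truth response.
    """
    augmented: List[Dict[str, object]] = []
    for index, record in enumerate(records):
        augmented.append({**record, "source_index": index, "is_paraphrase": False})
        augmented.append(
            {
                **record,
                "instruction": paraphrase(str(record["instruction"])),
                "source_index": index,
                "is_paraphrase": True,
            }
        )
    return augmented


# Made-up record used only to exercise the function.
records = [{"instruction": "Name three fruits.", "response": "Apple, pear, plum."}]
augmented = augment_with_paraphrases(records, toy_paraphrase)
```

Only the instruction is restated here; the response is copied unchanged so that the ground-truth answer is preserved.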

# Dataset

## Purpose of Collection

As part of our continuing commitment to open source, Databricks developed what is, to the best of our knowledge, the first open source,

human-generated instruction corpus specifically designed to enable large language models to exhibit the magical interactivity of ChatGPT.

Unlike other datasets that are limited to non-commercial use, this dataset can be used, modified, and extended for any purpose, including

academic or commercial applications.

## Sources

- **Human-generated data**: Databricks employees were invited to create prompt / response pairs in each of eight different instruction categories.

- **Wikipedia**: For instruction categories that require an annotator to consult a reference text (information extraction, closed QA, summarization), contributors selected passages from Wikipedia. No guidance was given to annotators as to how to select the target passages.
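Because only some categories are expected to carry a reference text, a quick consistency check can be useful before training. The sketch below assumes records are dictionaries with `category` and `context` keys and that the three reference-text categories are labeled `closed_qa`, `summarization`, and `information_extraction`; these label strings are an assumption and should be checked against the actual data.

```python
from typing import Dict, List

# Assumption: label strings for the categories that require a reference text.
REFERENCE_TEXT_CATEGORIES = {"closed_qa", "summarization", "information_extraction"}


def find_missing_context(records: List[Dict[str, str]]) -> List[int]:
    """Return indices of records that should have a reference text but do not."""
    return [
        index
        for index, record in enumerate(records)
        if record.get("category") in REFERENCE_TEXT_CATEGORIES
        and not record.get("context", "").strip()
    ]


# Made-up records used only to exercise the check.
sample_records = [
    {"category": "closed_qa", "context": "A short passage."},
    {"category": "summarization", "context": ""},
    {"category": "brainstorming", "context": ""},
]
missing = find_missing_context(sample_records)
print(missing)
```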

## Annotator Guidelines

To create a record, employees were given a brief description of the annotation task as well as examples of the types of prompts typical

of each annotation task. Guidelines were succinct by design so as to encourage a high task completion rate, possibly at the cost of

rigorous compliance with an annotation rubric that concretely and reliably operationalizes the specific task. Caveat emptor.

The annotation guidelines for each of the categories are as follows:

- **Creative Writing**: Write a question or instruction that requires a creative, open-ended written response. The instruction should be reasonable to ask of a person with general world knowledge and should not require searching. In this task, your prompt should give very specific instructions to follow. Constraints, instructions, guidelines, or requirements all work, and the more of them the better.

- **Closed QA**: Write a question or instruction that requires a factually correct response based on a passage of text from Wikipedia. The question can be complex and can involve human-level reasoning capabilities, but should not require special knowledge. To create a question for this task, include both the text of the question and the reference text in the form.

- **Open QA**: Write a question that can be answered using general world knowledge or at most a single search. This task asks for opinions and facts about the world at large and does not provide any reference text for consultation.

- **Summarization**: Give a summary of a paragraph from Wikipedia. Please don't ask questions that will require more than 3-5 minutes to answer. To create a question for this task, include both the text of the question and the reference text in the form.

- **Information Extraction**: These questions involve reading a paragraph from Wikipedia and extracting information from the passage. Everything required to produce an answer (e.g. a list, keywords, etc.) should be included in the passage. To create a question for this task, include both the text of the question and the reference text in the form.

- **Classification**: These prompts contain lists or examples of entities to be classified, e.g. movie reviews, products, etc. In this task the text or list of entities under consideration is contained in the prompt (i.e. there is no reference text). You can choose any categories for classification you like; the more diverse, the better.

- **Brainstorming**: Think up lots of examples in response to a question asking to brainstorm ideas.

## Personal or Sensitive Data

This dataset contains public information (e.g., some information from Wikipedia). To our knowledge, it contains no personal identifiers of private individuals and no sensitive information.

## Language

American English

# Known Limitations

- Wikipedia is a crowdsourced corpus and the contents of this dataset may reflect the bias, factual errors and topical focus found in Wikipedia

- Some annotators may not be native English speakers

- Annotator demographics and subject matter may reflect the makeup of Databricks employees

# Citation

```
@online{DatabricksBlog2023DollyV2,
    author    = {Mike Conover and Matt Hayes and Ankit Mathur and Jianwei Xie and Jun Wan and Sam Shah and Ali Ghodsi and Patrick Wendell and Matei Zaharia and Reynold Xin},
    title     = {Free Dolly: Introducing the World's First Truly Open Instruction-Tuned LLM},
    year      = {2023},
    url       = {https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm},
    urldate   = {2023-06-30}
}
```

# License/Attribution

**Copyright (2023) Databricks, Inc.**

This dataset was developed at Databricks (https://www.databricks.com) and its use is subject to the CC BY-SA 3.0 license.

Certain categories of material in the dataset include materials from the following sources, licensed under the CC BY-SA 3.0 license:

Wikipedia (various pages) - https://www.wikipedia.org/

Copyright © Wikipedia editors and contributors.