---

dataset_info:

- config_name: ai2d

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 435362437.84770346

num_examples: 2434

download_size: 438136609

dataset_size: 435362437.84770346

- config_name: aokvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 871997710.0

num_examples: 16539

download_size: 893265070

dataset_size: 871997710.0

- config_name: chart2text

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1060566797.2728182

num_examples: 26961

download_size: 1103141721

dataset_size: 1060566797.2728182

- config_name: chartqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 784719364.9441738

num_examples: 18265

download_size: 803192402

dataset_size: 784719364.9441738

- config_name: clevr

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 11522617868.0

num_examples: 70000

download_size: 13267429872

dataset_size: 11522617868.0

- config_name: clevr_math

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 13308311206.0

num_examples: 70000

download_size: 16315284

dataset_size: 13308311206.0

- config_name: cocoqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 2213960474.0

num_examples: 46287

download_size: 2393991009

dataset_size: 2213960474.0

- config_name: datikz

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 481233278.0

num_examples: 47974

download_size: 613100257

dataset_size: 481233278.0

- config_name: diagram_image_to_text

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 18877197.0

num_examples: 300

download_size: 18706661

dataset_size: 18877197.0

- config_name: docvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 6885686042.0

num_examples: 10189

download_size: 6887803845

dataset_size: 6885686042.0

- config_name: dvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 3689940101.0

num_examples: 200000

download_size: 4295254110

dataset_size: 3689940101.0

- config_name: figureqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1901887152.0

num_examples: 100000

download_size: 2220036667

dataset_size: 1901887152.0

- config_name: finqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 135268568.0

num_examples: 5276

download_size: 123698250

dataset_size: 135268568.0

- config_name: geomverse

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 951640204.0

num_examples: 9303

download_size: 323746516

dataset_size: 951640204.0

- config_name: hateful_memes

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 3035059823.0

num_examples: 8500

download_size: 3054208907

dataset_size: 3035059823.0

- config_name: hitab

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 161130580.0

num_examples: 2500

download_size: 158295807

dataset_size: 161130580.0

- config_name: iam

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1129180352.0

num_examples: 5663

download_size: 1128935602

dataset_size: 1129180352.0

- config_name: iconqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 264513634.7170419

num_examples: 27307

download_size: 326674337

dataset_size: 264513634.7170419

- config_name: infographic_vqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 291677986.0

num_examples: 2118

download_size: 292351760

dataset_size: 291677986.0

- config_name: intergps

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 24982328.291771192

num_examples: 1280

download_size: 24870320

dataset_size: 24982328.291771192

- config_name: localized_narratives

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 21380844262.41927

num_examples: 199998

download_size: 22164342699

dataset_size: 21380844262.41927

- config_name: mapqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 3238062926.0

num_examples: 37417

download_size: 3307676486

dataset_size: 3238062926.0

- config_name: mimic_cgd

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 12592929433.0

num_examples: 70939

download_size: 13147641100

dataset_size: 12592929433.0

- config_name: multihiertt

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1356766489.046

num_examples: 7619

download_size: 1360814135

dataset_size: 1356766489.046

- config_name: nlvr2

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 8375492591.0

num_examples: 50426

download_size: 10838882020

dataset_size: 8375492591.0

- config_name: ocrvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 5467134439.0

num_examples: 165746

download_size: 6078073015

dataset_size: 5467134439.0

- config_name: okvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 281454288182.492

num_examples: 9009

download_size: 3009062

dataset_size: 281454288182.492

- config_name: plotqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 7837605221.0

num_examples: 157070

download_size: 5320249066

dataset_size: 7837605221.0

- config_name: raven

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1506550467.0

num_examples: 42000

download_size: 1720691636

dataset_size: 1506550467.0

- config_name: rendered_text

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 11086896502.0

num_examples: 10000

download_size: 11086960376

dataset_size: 11086896502.0

- config_name: robut_sqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 679135952.0

num_examples: 8514

download_size: 678722272

dataset_size: 679135952.0

- config_name: robut_wikisql

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 5950915477.0

num_examples: 74989

download_size: 6160300141

dataset_size: 5950915477.0

- config_name: robut_wtq

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 4023729236.0

num_examples: 38246

download_size: 4061523247

dataset_size: 4023729236.0

- config_name: scienceqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 284601898.76188564

num_examples: 4976

download_size: 283265438

dataset_size: 284601898.76188564

- config_name: screen2words

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1670723783.0

num_examples: 15730

download_size: 1346254268

dataset_size: 1670723783.0

- config_name: spot_the_diff

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 1643123792.0

num_examples: 8566

download_size: 1526740548

dataset_size: 1643123792.0

- config_name: st_vqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 696265340.0

num_examples: 17247

download_size: 720462890

dataset_size: 696265340.0

- config_name: tabmwp

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 265337140.19648907

num_examples: 22722

download_size: 306643610

dataset_size: 265337140.19648907

- config_name: tallyqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 4267143189.0

num_examples: 98680

download_size: 4662245152

dataset_size: 4267143189.0

- config_name: tat_qa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 73213942.0

num_examples: 2199

download_size: 70862028

dataset_size: 73213942.0

- config_name: textcaps

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 5938676115.0

num_examples: 21953

download_size: 6175419911

dataset_size: 5938676115.0

- config_name: textvqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 5939437331.0

num_examples: 21953

download_size: 6175442839

dataset_size: 5939437331.0

- config_name: tqa

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 380346870.806369

num_examples: 1493

download_size: 378238311

dataset_size: 380346870.806369

- config_name: vistext

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 541250281.0

num_examples: 9969

download_size: 386023352

dataset_size: 541250281.0

- config_name: visual7w

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 4432168161.0

num_examples: 14366

download_size: 4443083495

dataset_size: 4432168161.0

- config_name: visualmrc

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 2941051627.2639995

num_examples: 3027

download_size: 2912911810

dataset_size: 2941051627.2639995

- config_name: vqarad

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 16561537.0

num_examples: 313

download_size: 16226241

dataset_size: 16561537.0

- config_name: vqav2

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 10630091683.0

num_examples: 82772

download_size: 13479302437

dataset_size: 10630091683.0

- config_name: vsr

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 107489763.0

num_examples: 2157

download_size: 107576214

dataset_size: 107489763.0

- config_name: websight

features:

- name: images

sequence: image

- name: texts

list:

- name: user

dtype: string

- name: assistant

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 2011365901.0

num_examples: 10000

download_size: 1601222161

dataset_size: 2011365901.0

configs:

- config_name: ai2d

data_files:

- split: train

path: ai2d/train-*

- config_name: aokvqa

data_files:

- split: train

path: aokvqa/train-*

- config_name: chart2text

data_files:

- split: train

path: chart2text/train-*

- config_name: chartqa

data_files:

- split: train

path: chartqa/train-*

- config_name: clevr

data_files:

- split: train

path: clevr/train-*

- config_name: clevr_math

data_files:

- split: train

path: clevr_math/train-*

- config_name: cocoqa

data_files:

- split: train

path: cocoqa/train-*

- config_name: datikz

data_files:

- split: train

path: datikz/train-*

- config_name: diagram_image_to_text

data_files:

- split: train

path: diagram_image_to_text/train-*

- config_name: docvqa

data_files:

- split: train

path: docvqa/train-*

- config_name: dvqa

data_files:

- split: train

path: dvqa/train-*

- config_name: figureqa

data_files:

- split: train

path: figureqa/train-*

- config_name: finqa

data_files:

- split: train

path: finqa/train-*

- config_name: geomverse

data_files:

- split: train

path: geomverse/train-*

- config_name: hateful_memes

data_files:

- split: train

path: hateful_memes/train-*

- config_name: hitab

data_files:

- split: train

path: hitab/train-*

- config_name: iam

data_files:

- split: train

path: iam/train-*

- config_name: iconqa

data_files:

- split: train

path: iconqa/train-*

- config_name: infographic_vqa

data_files:

- split: train

path: infographic_vqa/train-*

- config_name: intergps

data_files:

- split: train

path: intergps/train-*

- config_name: localized_narratives

data_files:

- split: train

path: localized_narratives/train-*

- config_name: mapqa

data_files:

- split: train

path: mapqa/train-*

- config_name: mimic_cgd

data_files:

- split: train

path: mimic_cgd/train-*

- config_name: multihiertt

data_files:

- split: train

path: multihiertt/train-*

- config_name: nlvr2

data_files:

- split: train

path: nlvr2/train-*

- config_name: ocrvqa

data_files:

- split: train

path: ocrvqa/train-*

- config_name: okvqa

data_files:

- split: train

path: okvqa/train-*

- config_name: plotqa

data_files:

- split: train

path: plotqa/train-*

- config_name: raven

data_files:

- split: train

path: raven/train-*

- config_name: rendered_text

data_files:

- split: train

path: rendered_text/train-*

- config_name: robut_sqa

data_files:

- split: train

path: robut_sqa/train-*

- config_name: robut_wikisql

data_files:

- split: train

path: robut_wikisql/train-*

- config_name: robut_wtq

data_files:

- split: train

path: robut_wtq/train-*

- config_name: scienceqa

data_files:

- split: train

path: scienceqa/train-*

- config_name: screen2words

data_files:

- split: train

path: screen2words/train-*

- config_name: spot_the_diff

data_files:

- split: train

path: spot_the_diff/train-*

- config_name: st_vqa

data_files:

- split: train

path: st_vqa/train-*

- config_name: tabmwp

data_files:

- split: train

path: tabmwp/train-*

- config_name: tallyqa

data_files:

- split: train

path: tallyqa/train-*

- config_name: tat_qa

data_files:

- split: train

path: tat_qa/train-*

- config_name: textcaps

data_files:

- split: train

path: textcaps/train-*

- config_name: textvqa

data_files:

- split: train

path: textvqa/train-*

- config_name: tqa

data_files:

- split: train

path: tqa/train-*

- config_name: vistext

data_files:

- split: train

path: vistext/train-*

- config_name: visual7w

data_files:

- split: train

path: visual7w/train-*

- config_name: visualmrc

data_files:

- split: train

path: visualmrc/train-*

- config_name: vqarad

data_files:

- split: train

path: vqarad/train-*

- config_name: vqav2

data_files:

- split: train

path: vqav2/train-*

- config_name: vsr

data_files:

- split: train

path: vsr/train-*

- config_name: websight

data_files:

- split: train

path: websight/train-*

---

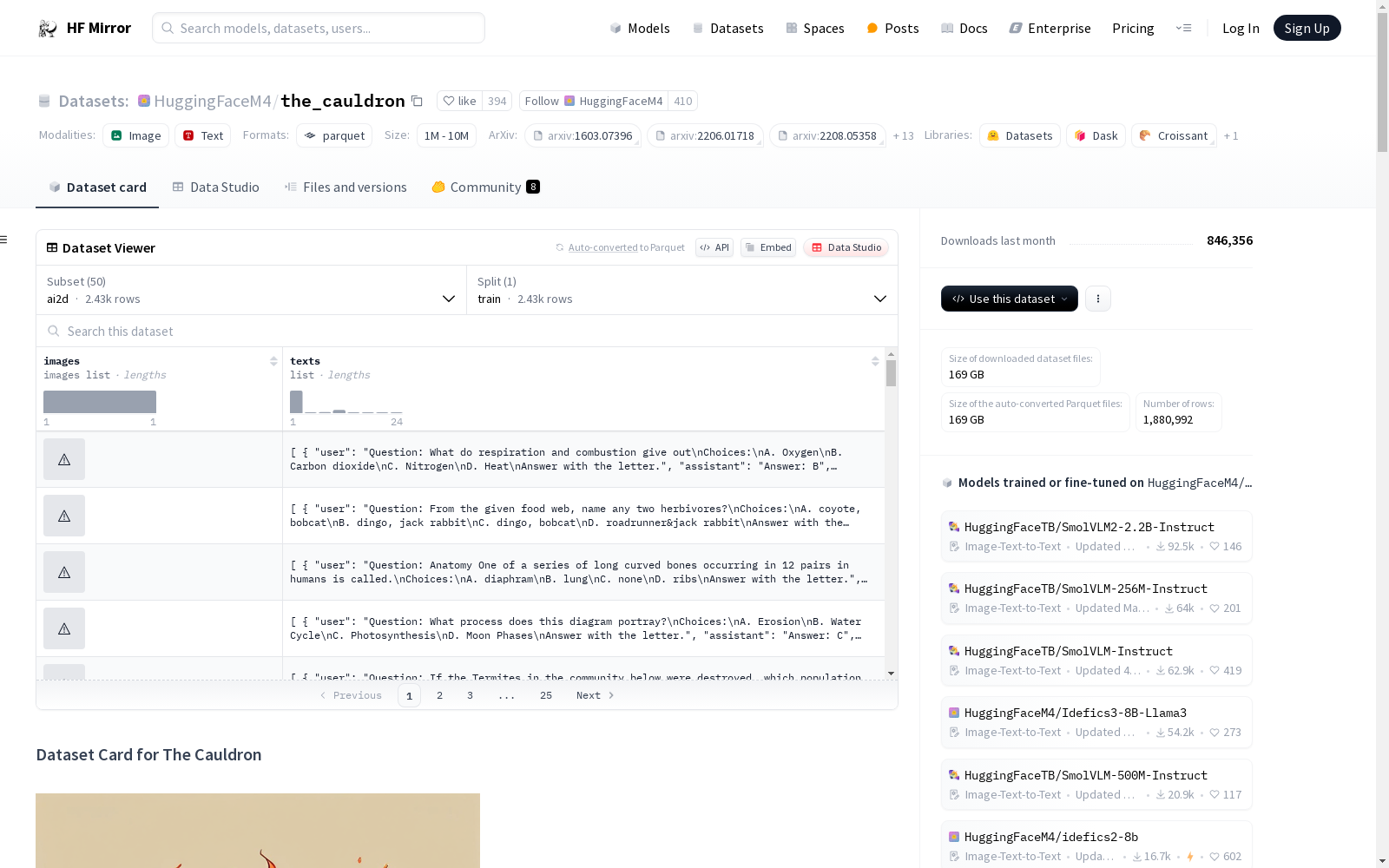

# Dataset Card for The Cauldron

## Dataset description

The Cauldron is part of the Idefics2 release.

It is a massive collection of 50 vision-language datasets (training sets only) that were used to fine-tune the vision-language model Idefics2.

## Load the dataset

To load the dataset, install the `datasets` library with `pip install datasets`. Then run the following to download and load one config, for example `ai2d`:

```python
from datasets import load_dataset

ds = load_dataset("HuggingFaceM4/the_cauldron", "ai2d")
```
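Since every sub-dataset is its own config, loading several of them means one `load_dataset` call per config name. The following is a minimal sketch of that loop, not an official helper: the function name `load_cauldron_configs` and its `loader` parameter are hypothetical, and `loader` exists only so the function can be tried without downloading anything.

```python
REPO_ID = "HuggingFaceM4/the_cauldron"


def load_cauldron_configs(config_names, loader=None):
    """Load the train split of several configs into a dict keyed by config name.

    `loader` is a hypothetical injection point: it defaults to
    `datasets.load_dataset`, but any callable with the same call shape works.
    """
    if loader is None:
        # Imported lazily so the function can be defined without `datasets` installed.
        from datasets import load_dataset
        loader = load_dataset
    return {name: loader(REPO_ID, name, split="train") for name in config_names}


# Example call (downloads data, so it is left commented out):
# subsets = load_cauldron_configs(["ai2d", "chartqa", "docvqa"])
```

Passing `split="train"` returns the split directly instead of a dictionary of splits, which is convenient here because each config only has a train split.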

## Data fields

An example of a sample looks as follows:

```
{
    "images": [PIL.Image],
    "texts": [
        {
            "user": "Question: How many actions are depicted in the diagram?\nChoices:\nA. 6.\nB. 4.\nC. 8.\nD. 7.\nAnswer with the letter.",
            "assistant": "Answer: D",
            "source": "TQA"
        }
    ]
}
```

`images` is a list of images, to be placed before the text.

`texts` is a conversation between a user and an assistant about the images, represented as a list of turns. Each turn holds a `user` string, an `assistant` string and a `source` string (`"TQA"` in the example above).
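To make the layout concrete, here is a small sketch that flattens one sample into alternating user and assistant messages, with the images placed before the text of the first user turn. The function name `sample_to_messages` and the message schema (`role`, `content`, `type`) are assumptions chosen for illustration and are not defined by this dataset card. The sample below is made up, and a string stands in for the PIL image.

```python
def sample_to_messages(sample):
    """Flatten one sample into a list of chat messages (hypothetical schema)."""
    messages = []
    for turn_index, turn in enumerate(sample["texts"]):
        user_content = [{"type": "text", "text": turn["user"]}]
        if turn_index == 0:
            # One image slot per image, placed before the text of the first turn.
            image_slots = [{"type": "image"} for _ in sample["images"]]
            user_content = image_slots + user_content
        messages.append({"role": "user", "content": user_content})
        messages.append(
            {"role": "assistant", "content": [{"type": "text", "text": turn["assistant"]}]}
        )
    return messages


# Made-up sample with the same fields as the dataset.
sample = {
    "images": ["<image placeholder>"],
    "texts": [
        {"user": "Question: What is shown?", "assistant": "Answer: A diagram.", "source": "TQA"},
        {"user": "Question: How many arrows?", "assistant": "Answer: 4", "source": "TQA"},
    ],
}

messages = sample_to_messages(sample)
print(len(messages))  # prints 4: two turns, each with a user and an assistant message
```

With this layout, the number of Q/A pairs in a sample is simply `len(sample["texts"])`.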

## Stats about the datasets in The Cauldron

| Dataset | # images | # Q/A pairs | # tokens |
|----------------------|----------|-------------|------------|
| *General visual question answering* | | | |
| VQAv2 | 82,772 | 443,757 | 1,595,929 |
| COCO-QA | 46,287 | 78,736 | 286,982 |
| Visual7W | 14,366 | 69,817 | 279,268 |
| A-OKVQA | 16,539 | 17,056 | 236,492 |
| TallyQA | 98,680 | 183,986 | 738,254 |
| OK-VQA | 8,998 | 9,009 | 38,853 |
| HatefulMemes | 8,500 | 8,500 | 25,500 |
| VQA-RAD | 313 | 1,793 | 8,418 |
| *Captioning* | | | |
| LNarratives | 507,444 | 507,444 | 21,328,731 |
| Screen2Words | 15,730 | 15,743 | 143,103 |
| VSR | 2,157 | 3,354 | 10,062 |
| *OCR, document understanding, text transcription* | | | |
| RenderedText | 999,000 | 999,000 | 27,207,774 |
| DocVQA | 10,189 | 39,463 | 337,829 |
| TextCaps | 21,953 | 21,953 | 389,658 |
| TextVQA | 21,953 | 34,602 | 181,918 |
| ST-VQA | 17,247 | 23,121 | 127,846 |
| OCR-VQA | 165,746 | 801,579 | 6,073,824 |
| VisualMRC | 3,027 | 11,988 | 168,828 |
| IAM | 5,663 | 5,663 | 144,216 |
| InfoVQA | 2,118 | 10,074 | 61,048 |
| Diagram image-to-text | 300 | 300 | 22,196 |
| *Chart/figure understanding* | | | |
| Chart2Text | 26,985 | 30,242 | 2,852,827 |
| DVQA | 200,000 | 2,325,316 | 8,346,234 |
| VisText | 7,057 | 9,969 | 1,245,485 |
| ChartQA | 18,271 | 28,299 | 185,835 |
| PlotQA | 157,070 | 20,249,479 | 8478299.278 |
| FigureQA | 100,000 | 1,327,368 | 3,982,104 |
| MapQA | 37,417 | 483,416 | 6,470,485 |
| *Table understanding* | | | |
| TabMWP | 22,729 | 23,059 | 1,948,166 |
| TAT-QA | 2,199 | 13,215 | 283,776 |
| HiTab | 2,500 | 7,782 | 351,299 |
| MultiHiertt | 7,619 | 7,830 | 267,615 |
| FinQA | 5,276 | 6,251 | 242,561 |
| WikiSQL | 74,989 | 86,202 | 9,680,673 |
| SQA | 8,514 | 34,141 | 1,894,824 |
| WTQ | 38,246 | 44,096 | 6,677,013 |
| *Reasoning, logic, maths* | | | |
| GeomVerse | 9,303 | 9,339 | 2,489,459 |
| CLEVR-Math | 70,000 | 788,650 | 3,184,656 |
| CLEVR | 70,000 | 699,989 | 2,396,781 |
| IconQA | 27,315 | 29,859 | 112,969 |
| RAVEN | 42,000 | 42,000 | 105,081 |
| Inter-GPS | 1,451 | 2,101 | 8,404 |
| *Textbook/academic questions* | | | |
| AI2D | 3,099 | 9,708 | 38,832 |
| TQA | 1,496 | 6,501 | 26,004 |
| ScienceQA | 4,985 | 6,218 | 24,872 |
| *Differences between 2 images* | | | |
| NLVR2 | 50,426 | 86,373 | 259,119 |
| GSD | 70,939 | 141,869 | 4,637,229 |
| Spot the diff | 8,566 | 9,524 | 221,477 |
| *Screenshot to code* | | | |
| WebSight | 500,000 | 500,000 | 276,743,299 |
| DaTikz | 47,974 | 48,296 | 59,556,252 |

## Decontamination

The Cauldron contains only the train split of each sub-dataset.

In addition, we removed the few examples containing an image that is also present in the test splits of MMMU, MathVista or MMBench.
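This card does not say how overlapping images were detected. As an illustration only, the sketch below assumes exact matching on a SHA-256 hash of the raw image bytes. The function names `image_fingerprint` and `drop_contaminated` are hypothetical, and images are assumed to be available as `bytes` rather than as PIL images.

```python
import hashlib


def image_fingerprint(image_bytes: bytes) -> str:
    """Hash the raw image bytes (assumption: exact-duplicate matching only)."""
    return hashlib.sha256(image_bytes).hexdigest()


def drop_contaminated(train_examples, benchmark_images):
    """Keep only the examples that share no image with the benchmark test images.

    `train_examples` is a list of dicts with an "images" list of bytes;
    `benchmark_images` is an iterable of bytes from the benchmark test splits.
    """
    banned = {image_fingerprint(image) for image in benchmark_images}
    return [
        example
        for example in train_examples
        if not any(image_fingerprint(image) in banned for image in example["images"])
    ]
```

Exact hashing misses resized or re-encoded copies of an image, so a real pipeline may need a perceptual or embedding-based comparison instead.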

## References to the original datasets

<details>

<summary>References to the original datasets</summary>

@misc{AI2D,

title={A Diagram Is Worth A Dozen Images},

author={Aniruddha Kembhavi and Mike Salvato and Eric Kolve and Minjoon Seo and Hannaneh Hajishirzi and Ali Farhadi},

year={2016},

eprint={1603.07396},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{A-OKVQA,

title={A-OKVQA: A Benchmark for Visual Question Answering using World Knowledge},

author={Dustin Schwenk and Apoorv Khandelwal and Christopher Clark and Kenneth Marino and Roozbeh Mottaghi},

year={2022},

eprint={2206.01718},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{Chart2Text,

title = "Chart-to-Text: Generating Natural Language Descriptions for Charts by Adapting the Transformer Model",

author = "Obeid, Jason and

Hoque, Enamul",

editor = "Davis, Brian and

Graham, Yvette and

Kelleher, John and

Sripada, Yaji",

booktitle = "Proceedings of the 13th International Conference on Natural Language Generation",

month = dec,

year = "2020",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2020.inlg-1.20",

doi = "10.18653/v1/2020.inlg-1.20",

pages = "138--147",

}

@inproceedings{ChartQA,

title = "{C}hart{QA}: A Benchmark for Question Answering about Charts with Visual and Logical Reasoning",

author = "Masry, Ahmed and

Long, Do and

Tan, Jia Qing and

Joty, Shafiq and

Hoque, Enamul",

booktitle = "Findings of the Association for Computational Linguistics: ACL 2022",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.findings-acl.177",

doi = "10.18653/v1/2022.findings-acl.177",

pages = "2263--2279",

}

@misc{CLEVR-Math,

doi = {10.48550/ARXIV.2208.05358},

url = {https://arxiv.org/abs/2208.05358},

author = {Lindström, Adam Dahlgren},

keywords = {Machine Learning (cs.LG), Computation and Language (cs.CL), Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences, I.2.7; I.2.10; I.2.6; I.4.8; I.1.4},

title = {CLEVR-Math: A Dataset for Compositional Language, Visual, and Mathematical Reasoning},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution Share Alike 4.0 International}

}

@misc{CLEVR,

title={CLEVR: A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning},

author={Justin Johnson and Bharath Hariharan and Laurens van der Maaten and Li Fei-Fei and C. Lawrence Zitnick and Ross Girshick},

year={2016},

eprint={1612.06890},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{CocoQA,

author = {Ren, Mengye and Kiros, Ryan and Zemel, Richard},

booktitle = {Advances in Neural Information Processing Systems},

editor = {C. Cortes and N. Lawrence and D. Lee and M. Sugiyama and R. Garnett},

pages = {},

publisher = {Curran Associates, Inc.},

title = {Exploring Models and Data for Image Question Answering},

url = {https://proceedings.neurips.cc/paper_files/paper/2015/file/831c2f88a604a07ca94314b56a4921b8-Paper.pdf},

volume = {28},

year = {2015}

}

@misc{DaTikz,

title={AutomaTikZ: Text-Guided Synthesis of Scientific Vector Graphics with TikZ},

author={Jonas Belouadi and Anne Lauscher and Steffen Eger},

year={2024},

eprint={2310.00367},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

Diagram image to text: https://huggingface.co/datasets/Kamizuru00/diagram_image_to_text by @Kamizuru00

@INPROCEEDINGS{DocVQA,

author={Mathew, Minesh and Karatzas, Dimosthenis and Jawahar, C. V.},

booktitle={2021 IEEE Winter Conference on Applications of Computer Vision (WACV)},

title={DocVQA: A Dataset for VQA on Document Images},

year={2021},

volume={},

number={},

pages={2199-2208},

keywords={Visualization;Computer vision;Text analysis;Image recognition;Image analysis;Conferences;Layout},

doi={10.1109/WACV48630.2021.00225}}

@inproceedings{DVQA,

title={DVQA: Understanding Data Visualizations via Question Answering},

author={Kafle, Kushal and Cohen, Scott and Price, Brian and Kanan, Christopher},

booktitle={CVPR},

year={2018}

}

@misc{FigureQA,

title={FigureQA: An Annotated Figure Dataset for Visual Reasoning},

author={Samira Ebrahimi Kahou and Vincent Michalski and Adam Atkinson and Akos Kadar and Adam Trischler and Yoshua Bengio},

year={2018},

eprint={1710.07300},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{FinQA,

title = "{F}in{QA}: A Dataset of Numerical Reasoning over Financial Data",

author = "Chen, Zhiyu and

Chen, Wenhu and

Smiley, Charese and

Shah, Sameena and

Borova, Iana and

Langdon, Dylan and

Moussa, Reema and

Beane, Matt and

Huang, Ting-Hao and

Routledge, Bryan and

Wang, William Yang",

editor = "Moens, Marie-Francine and

Huang, Xuanjing and

Specia, Lucia and

Yih, Scott Wen-tau",

booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",

month = nov,

year = "2021",

address = "Online and Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.emnlp-main.300",

doi = "10.18653/v1/2021.emnlp-main.300",

pages = "3697--3711",

}

@misc{GeomVerse,

title={GeomVerse: A Systematic Evaluation of Large Models for Geometric Reasoning},

author={Mehran Kazemi and Hamidreza Alvari and Ankit Anand and Jialin Wu and Xi Chen and Radu Soricut},

year={2023},

eprint={2312.12241},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{hatefulmeme,

author = {Kiela, Douwe and Firooz, Hamed and Mohan, Aravind and Goswami, Vedanuj and Singh, Amanpreet and Ringshia, Pratik and Testuggine, Davide},

booktitle = {Advances in Neural Information Processing Systems},

editor = {H. Larochelle and M. Ranzato and R. Hadsell and M.F. Balcan and H. Lin},

pages = {2611--2624},

publisher = {Curran Associates, Inc.},

title = {The Hateful Memes Challenge: Detecting Hate Speech in Multimodal Memes},

url = {https://proceedings.neurips.cc/paper_files/paper/2020/file/1b84c4cee2b8b3d823b30e2d604b1878-Paper.pdf},

volume = {33},

year = {2020}

}

@inproceedings{Hitab,

title = "{H}i{T}ab: A Hierarchical Table Dataset for Question Answering and Natural Language Generation",

author = "Cheng, Zhoujun and

Dong, Haoyu and

Wang, Zhiruo and

Jia, Ran and

Guo, Jiaqi and

Gao, Yan and

Han, Shi and

Lou, Jian-Guang and

Zhang, Dongmei",

editor = "Muresan, Smaranda and

Nakov, Preslav and

Villavicencio, Aline",

booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.acl-long.78",

doi = "10.18653/v1/2022.acl-long.78",

pages = "1094--1110",

}

@article{IAM,

author = {Marti, Urs-Viktor and Bunke, H.},

year = {2002},

month = {11},

pages = {39-46},

title = {The IAM-database: An English sentence database for offline handwriting recognition},

volume = {5},

journal = {International Journal on Document Analysis and Recognition},

doi = {10.1007/s100320200071}

}

@inproceedings{IconQA,

title = {IconQA: A New Benchmark for Abstract Diagram Understanding and Visual Language Reasoning},

author = {Lu, Pan and Qiu, Liang and Chen, Jiaqi and Xia, Tony and Zhao, Yizhou and Zhang, Wei and Yu, Zhou and Liang, Xiaodan and Zhu, Song-Chun},

booktitle = {The 35th Conference on Neural Information Processing Systems (NeurIPS) Track on Datasets and Benchmarks},

year = {2021}

}

@INPROCEEDINGS{InfographicVQA,

author={Mathew, Minesh and Bagal, Viraj and Tito, Rubèn and Karatzas, Dimosthenis and Valveny, Ernest and Jawahar, C. V.},

booktitle={2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

title={InfographicVQA},

year={2022},

volume={},

number={},

pages={2582-2591},

keywords={Visualization;Computer vision;Computational modeling;Layout;Data visualization;Benchmark testing;Brain modeling;Document Analysis Datasets;Evaluation and Comparison of Vision Algorithms;Vision and Languages},

doi={10.1109/WACV51458.2022.00264}

}

@inproceedings{Inter-GPS,

title = {Inter-GPS: Interpretable Geometry Problem Solving with Formal Language and Symbolic Reasoning},

author = {Lu, Pan and Gong, Ran and Jiang, Shibiao and Qiu, Liang and Huang, Siyuan and Liang, Xiaodan and Zhu, Song-Chun},

booktitle = {The Joint Conference of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP 2021)},

year = {2021}

}

@misc{LocalizedNarratives,

title={Connecting Vision and Language with Localized Narratives},

author={Jordi Pont-Tuset and Jasper Uijlings and Soravit Changpinyo and Radu Soricut and Vittorio Ferrari},

year={2020},

eprint={1912.03098},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{MapQA,

title={MapQA: A Dataset for Question Answering on Choropleth Maps},

author={Shuaichen Chang and David Palzer and Jialin Li and Eric Fosler-Lussier and Ningchuan Xiao},

year={2022},

eprint={2211.08545},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{MIMIC-IT-General-Scene-Difference,

title={MIMIC-IT: Multi-Modal In-Context Instruction Tuning},

author={Bo Li and Yuanhan Zhang and Liangyu Chen and Jinghao Wang and Fanyi Pu and Jingkang Yang and Chunyuan Li and Ziwei Liu},

year={2023},

eprint={2306.05425},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{Multihiertt,

title = "{M}ulti{H}iertt: Numerical Reasoning over Multi Hierarchical Tabular and Textual Data",

author = "Zhao, Yilun and

Li, Yunxiang and

Li, Chenying and

Zhang, Rui",

booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = may,

year = "2022",

address = "Dublin, Ireland",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.acl-long.454",

pages = "6588--6600",

}

@inproceedings{NLVR2,

title = "A Corpus for Reasoning about Natural Language Grounded in Photographs",

author = "Suhr, Alane and

Zhou, Stephanie and

Zhang, Ally and

Zhang, Iris and

Bai, Huajun and

Artzi, Yoav",

editor = "Korhonen, Anna and

Traum, David and

M{\`a}rquez, Llu{\'\i}s",

booktitle = "Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2019",

address = "Florence, Italy",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/P19-1644",

doi = "10.18653/v1/P19-1644",

pages = "6418--6428",

}

@INPROCEEDINGS{OCR-VQA,

author={Mishra, Anand and Shekhar, Shashank and Singh, Ajeet Kumar and Chakraborty, Anirban},

booktitle={2019 International Conference on Document Analysis and Recognition (ICDAR)},

title={OCR-VQA: Visual Question Answering by Reading Text in Images},

year={2019},

volume={},

number={},

pages={947-952},

keywords={Optical character recognition software;Visualization;Task analysis;Knowledge discovery;Text analysis;Text recognition;Character recognition;Optical Character Recognition (OCR), Visual Question Answering (VQA), Document image analysis, textVQA},

doi={10.1109/ICDAR.2019.00156}

}

@InProceedings{okvqa,

author = {Kenneth Marino and Mohammad Rastegari and Ali Farhadi and Roozbeh Mottaghi},

title = {OK-VQA: A Visual Question Answering Benchmark Requiring External Knowledge},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2019},

}

@InProceedings{PlotQA,

author = {Methani, Nitesh and Ganguly, Pritha and Khapra, Mitesh M. and Kumar, Pratyush},

title = {PlotQA: Reasoning over Scientific Plots},

booktitle = {The IEEE Winter Conference on Applications of Computer Vision (WACV)},

month = {March},

year = {2020}

}

@inproceedings{RAVEN,

title={RAVEN: A Dataset for Relational and Analogical Visual rEasoNing},

author={Zhang, Chi and Gao, Feng and Jia, Baoxiong and Zhu, Yixin and Zhu, Song-Chun},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2019}

}

RenderedText: https://huggingface.co/datasets/wendlerc/RenderedText by @wendlerc

@inproceedings{Robut,

title = "{R}obu{T}: A Systematic Study of Table {QA} Robustness Against Human-Annotated Adversarial Perturbations",

author = "Zhao, Yilun and

Zhao, Chen and

Nan, Linyong and

Qi, Zhenting and

Zhang, Wenlin and

Tang, Xiangru and

Mi, Boyu and

Radev, Dragomir",

editor = "Rogers, Anna and

Boyd-Graber, Jordan and

Okazaki, Naoaki",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-long.334",

doi = "10.18653/v1/2023.acl-long.334",

pages = "6064--6081",

}

@inproceedings{SQA,

title = "Search-based Neural Structured Learning for Sequential Question Answering",

author = "Iyyer, Mohit and

Yih, Wen-tau and

Chang, Ming-Wei",

editor = "Barzilay, Regina and

Kan, Min-Yen",

booktitle = "Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = jul,

year = "2017",

address = "Vancouver, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/P17-1167",

doi = "10.18653/v1/P17-1167",

pages = "1821--1831",

}

@misc{WikiSQL,

title={Seq2SQL: Generating Structured Queries from Natural Language using Reinforcement Learning},

author={Victor Zhong and Caiming Xiong and Richard Socher},

year={2017},

eprint={1709.00103},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@inproceedings{WTQ,

title = "Compositional Semantic Parsing on Semi-Structured Tables",

author = "Pasupat, Panupong and

Liang, Percy",

editor = "Zong, Chengqing and

Strube, Michael",

booktitle = "Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers)",

month = jul,

year = "2015",

address = "Beijing, China",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/P15-1142",

doi = "10.3115/v1/P15-1142",

pages = "1470--1480",

}

@inproceedings{ScienceQA,

author = {Lu, Pan and Mishra, Swaroop and Xia, Tanglin and Qiu, Liang and Chang, Kai-Wei and Zhu, Song-Chun and Tafjord, Oyvind and Clark, Peter and Kalyan, Ashwin},

booktitle = {Advances in Neural Information Processing Systems},

editor = {S. Koyejo and S. Mohamed and A. Agarwal and D. Belgrave and K. Cho and A. Oh},

pages = {2507--2521},

publisher = {Curran Associates, Inc.},

title = {Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering},

url = {https://proceedings.neurips.cc/paper_files/paper/2022/file/11332b6b6cf4485b84afadb1352d3a9a-Paper-Conference.pdf},

volume = {35},

year = {2022}

}

@inproceedings{screen2words,

author = {Wang, Bryan and Li, Gang and Zhou, Xin and Chen, Zhourong and Grossman, Tovi and Li, Yang},

title = {Screen2Words: Automatic Mobile UI Summarization with Multimodal Learning},

year = {2021},

isbn = {9781450386357},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3472749.3474765},

doi = {10.1145/3472749.3474765},

booktitle = {The 34th Annual ACM Symposium on User Interface Software and Technology},

pages = {498--510},

numpages = {13},

keywords = {Mobile UI summarization, dataset., deep learning, language-based UI, screen understanding},

location = {Virtual Event, USA},

series = {UIST '21}

}

@inproceedings{SpotTheDiff,

title = "Learning to Describe Differences Between Pairs of Similar Images",

author = "Jhamtani, Harsh and

others",

editor = "Riloff, Ellen and

Chiang, David and

Hockenmaier, Julia and

Tsujii, Jun{'}ichi",

booktitle = "Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing",

month = oct # "-" # nov,

year = "2018",

address = "Brussels, Belgium",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/D18-1436",

doi = "10.18653/v1/D18-1436",

pages = "4024--4034",

}

@INPROCEEDINGS{STVQA,

author={Biten, Ali Furkan and Tito, Rubèn and Mafla, Andrés and Gomez, Lluis and Rusiñol, Marçal and Jawahar, C.V. and Valveny, Ernest and Karatzas, Dimosthenis},

booktitle={2019 IEEE/CVF International Conference on Computer Vision (ICCV)},

title={Scene Text Visual Question Answering},

year={2019},

volume={},

number={},

pages={4290-4300},

keywords={Visualization;Task analysis;Knowledge discovery;Text recognition;Cognition;Computer vision;Semantics},

doi={10.1109/ICCV.2019.00439}

}

@inproceedings{TabMWP,

title={Dynamic Prompt Learning via Policy Gradient for Semi-structured Mathematical Reasoning},

author={Lu, Pan and Qiu, Liang and Chang, Kai-Wei and Wu, Ying Nian and Zhu, Song-Chun and Rajpurohit, Tanmay and Clark, Peter and Kalyan, Ashwin},

booktitle={International Conference on Learning Representations (ICLR)},

year={2023}

}

@inproceedings{TallyQA,

title={TallyQA: Answering Complex Counting Questions},

author={Acharya, Manoj and Kafle, Kushal and Kanan, Christopher},

booktitle={AAAI},

year={2019}

}

@inproceedings{TAT-QA,

title = "{TAT}-{QA}: A Question Answering Benchmark on a Hybrid of Tabular and Textual Content in Finance",

author = "Zhu, Fengbin and

Lei, Wenqiang and

Huang, Youcheng and

Wang, Chao and

Zhang, Shuo and

Lv, Jiancheng and

Feng, Fuli and

Chua, Tat-Seng",

booktitle = "Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers)",

month = aug,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.acl-long.254",

doi = "10.18653/v1/2021.acl-long.254",

pages = "3277--3287"

}

@misc{textcaps,

title={TextCaps: a Dataset for Image Captioning with Reading Comprehension},

author={Oleksii Sidorov and Ronghang Hu and Marcus Rohrbach and Amanpreet Singh},

year={2020},

eprint={2003.12462},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@inproceedings{textvqa,

title={Towards VQA Models That Can Read},

author={Singh, Amanpreet and Natarajan, Vivek and Shah, Meet and Jiang, Yu and Chen, Xinlei and Parikh, Devi and Rohrbach, Marcus},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},

pages={8317-8326},

year={2019}

}

@INPROCEEDINGS{TQA,

author={Kembhavi, Aniruddha and Seo, Minjoon and Schwenk, Dustin and Choi, Jonghyun and Farhadi, Ali and Hajishirzi, Hannaneh},

booktitle={2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

title={Are You Smarter Than a Sixth Grader? Textbook Question Answering for Multimodal Machine Comprehension},

year={2017},

volume={},

number={},

pages={5376-5384},

keywords={Knowledge discovery;Visualization;Cognition;Training;Natural languages;Computer vision},

doi={10.1109/CVPR.2017.571}

}

@inproceedings{VisText,

title = {{VisText: A Benchmark for Semantically Rich Chart Captioning}},

author = {Benny J. Tang AND Angie Boggust AND Arvind Satyanarayan},

booktitle = {The Annual Meeting of the Association for Computational Linguistics (ACL)},

year = {2023},

url = {http://vis.csail.mit.edu/pubs/vistext}

}

@InProceedings{Visual7w,

title = {{Visual7W: Grounded Question Answering in Images}},

author = {Yuke Zhu and Oliver Groth and Michael Bernstein and Li Fei-Fei},

booktitle = {{IEEE Conference on Computer Vision and Pattern Recognition}},

year = 2016,

}

@inproceedings{VisualMRC,

author = {Ryota Tanaka and

Kyosuke Nishida and

Sen Yoshida},

title = {VisualMRC: Machine Reading Comprehension on Document Images},

booktitle = {AAAI},

year = {2021}

}

@article{VQA-RAD,

author = {Lau, Jason and Gayen, Soumya and Ben Abacha, Asma and Demner-Fushman, Dina},

year = {2018},

month = {11},

pages = {180251},

title = {A dataset of clinically generated visual questions and answers about radiology images},

volume = {5},

journal = {Scientific Data},

doi = {10.1038/sdata.2018.251}

}

@misc{VQAv2,

title={Making the V in VQA Matter: Elevating the Role of Image Understanding in Visual Question Answering},

author={Yash Goyal and Tejas Khot and Douglas Summers-Stay and Dhruv Batra and Devi Parikh},

year={2017},

eprint={1612.00837},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{VSR,

title={Visual Spatial Reasoning},

author={Fangyu Liu and Guy Emerson and Nigel Collier},

year={2023},

eprint={2205.00363},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{WebSight,

title={Unlocking the conversion of Web Screenshots into HTML Code with the WebSight Dataset},

author={Hugo Laurençon and Léo Tronchon and Victor Sanh},

year={2024},

eprint={2403.09029},

archivePrefix={arXiv},

primaryClass={cs.HC}

}

</details>

## Licensing Information

Each publicly available sub-dataset in the Cauldron is governed by its own licensing conditions. When using any of them, you must therefore comply with the license that governs that sub-dataset.

To the extent we have any rights in the prompts, these are licensed under CC-BY-4.0.

## Citation Information

If you use this dataset, please cite:

```

@misc{laurençon2024matters,

title={What matters when building vision-language models?},

author={Hugo Laurençon and Léo Tronchon and Matthieu Cord and Victor Sanh},

year={2024},

eprint={2405.02246},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```